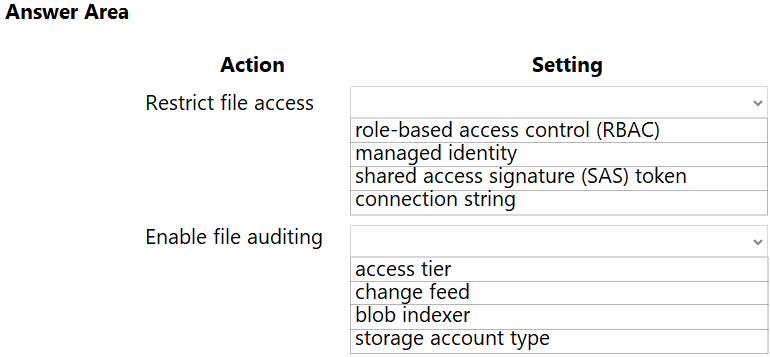

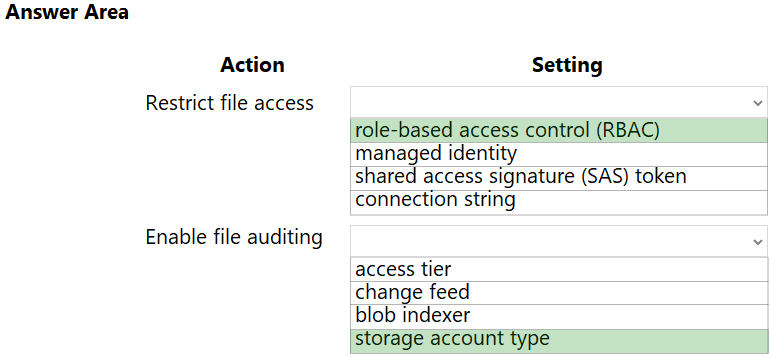

Box 1: role-based access control (RBAC)

Azure Storage supports authentication and authorization with Azure AD for the Blob and Queue services via Azure role-based access control (Azure RBAC).
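As a rough illustration of what Azure AD authorization looks like on the wire, the sketch below assembles the URL and headers for a Blob service GET request that carries an OAuth bearer token. It only builds the request and does not send it. The function name, the account, container, and blob names, and the chosen `x-ms-version` value are assumptions for illustration. The token itself would have to be obtained separately from Azure AD for the scope shown, and the caller would need a suitable Azure RBAC data role on the storage account.

```python
from urllib.parse import quote

# OAuth scope requested from Azure AD when asking for a Storage access token.
STORAGE_SCOPE = "https://storage.azure.com/.default"


def build_blob_get_request(account: str, container: str, blob: str, access_token: str):
    """Return (url, headers) for a Get Blob call authorized with an Azure AD token.

    Hypothetical helper: it builds the request pieces only and performs no I/O.
    """
    # Percent-encode the path segments; "/" inside the blob name is kept as-is.
    url = f"https://{account}.blob.core.windows.net/{quote(container)}/{quote(blob)}"
    headers = {
        # Bearer token issued by Azure AD for STORAGE_SCOPE.
        "Authorization": f"Bearer {access_token}",
        # OAuth authorization requires service version 2017-11-09 or later;
        # the exact version used here is an assumption.
        "x-ms-version": "2020-10-02",
    }
    return url, headers


if __name__ == "__main__":
    url, headers = build_blob_get_request(
        "contoso", "site-files", "docs/report 1.pdf", "<token>"
    )
    print(url)
    print(headers)
```

Whether the request succeeds is then decided by the role assignments on the account, not by an account key or shared secret.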

Scenario: File access must restrict access by IP, protocol, and Azure AD rights.

Box 2: storage account type

Scenario: The website uses files stored in Azure Storage.

Auditing of the file updates and transfers must be enabled to comply with General Data Protection Regulation (GDPR).

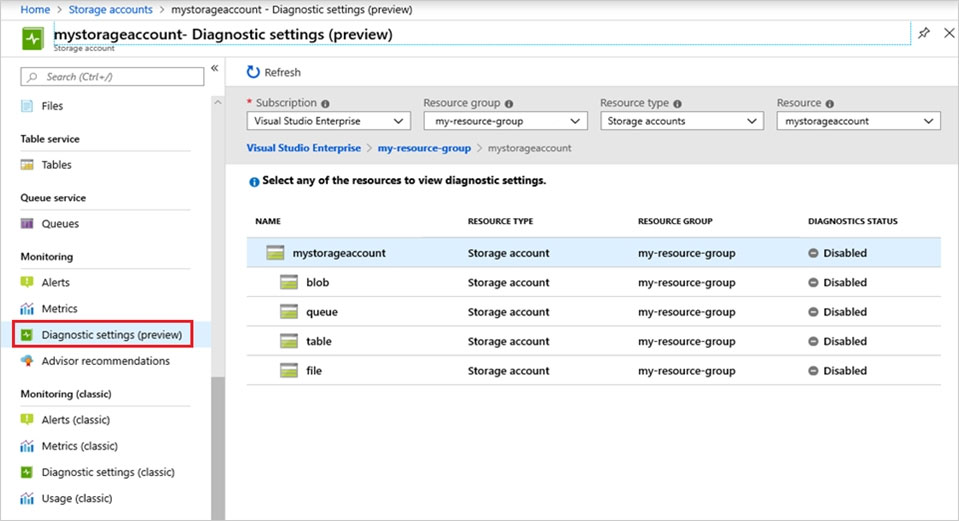

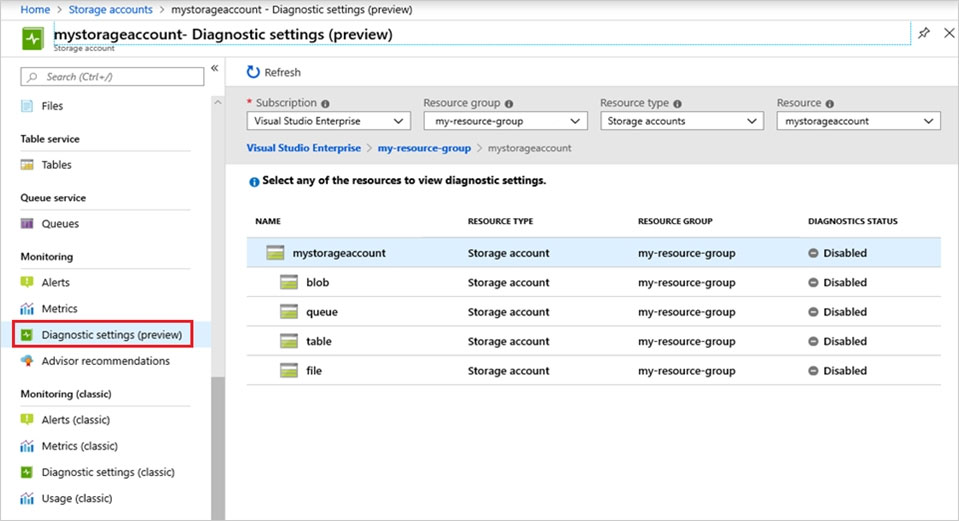

Creating a diagnostic setting:

1. Sign in to the Azure portal.

2. Navigate to your storage account.

3. In the Monitoring section, click Diagnostic settings (preview).

4. Choose file as the storage type for which you want to enable logs.

5. Click Add diagnostic setting.
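The portal steps above can also be approximated programmatically. The following is a minimal sketch, not a definitive implementation, that builds the URL and JSON body for an Azure Monitor diagnostic setting on the file service of a storage account. It constructs the request only and sends nothing. The helper names, the setting name, the subscription, resource group, and workspace values, and the API version are all assumptions; the log categories shown are the read, write, and delete categories used for storage resource logs.

```python
import json

# Assumed API version for the diagnostic settings endpoint.
API_VERSION = "2021-05-01-preview"

# Resource log categories for storage services (read, write, delete operations).
LOG_CATEGORIES = ("StorageRead", "StorageWrite", "StorageDelete")


def file_service_resource_id(subscription_id: str, resource_group: str, account: str) -> str:
    """Resource ID of the file service inside a storage account (hypothetical helper)."""
    return (
        f"/subscriptions/{subscription_id}/resourceGroups/{resource_group}"
        f"/providers/Microsoft.Storage/storageAccounts/{account}/fileServices/default"
    )


def build_diagnostic_setting(resource_id: str, setting_name: str, workspace_id: str):
    """Return (url, body) for a PUT that creates or updates a diagnostic setting."""
    url = (
        f"https://management.azure.com{resource_id}"
        f"/providers/Microsoft.Insights/diagnosticSettings/{setting_name}"
        f"?api-version={API_VERSION}"
    )
    body = {
        "properties": {
            # Destination: a Log Analytics workspace (placeholder resource ID).
            "workspaceId": workspace_id,
            # Enable every audit-relevant category for the file service.
            "logs": [{"category": c, "enabled": True} for c in LOG_CATEGORIES],
        }
    }
    return url, body


if __name__ == "__main__":
    rid = file_service_resource_id("<subscription-id>", "<resource-group>", "<account>")
    url, body = build_diagnostic_setting(rid, "file-audit", "<workspace-resource-id>")
    print(url)
    print(json.dumps(body, indent=2))
```

Targeting the `fileServices/default` sub-resource mirrors step 4, where file is chosen as the storage type whose logs are enabled.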

Reference:

https://docs.microsoft.com/en-us/azure/storage/common/storage-introduction

https://docs.microsoft.com/en-us/azure/storage/files/storage-files-monitoring