Question 171

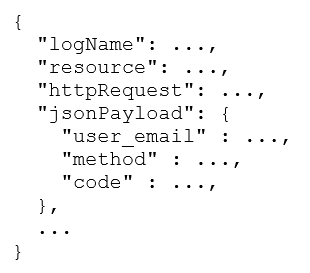

Your company runs an ecommerce business. The application responsible for payment processing has structured JSON logging with the following schema:

Capture and access of logs from the payment processing application is mandatory for operations, but the jsonPayload.user_email field contains personally identifiable information (PII). Your security team does not want the entire engineering team to have access to PII. You need to stop exposing PII to the engineering team and restrict access to security team members only. What should you do?

A. Apply the conditional role binding resource.name.extract("locations/global/buckets/{bucket}/") == "_Default" to the _Default bucket.

B. Apply a jsonPayload.user_email restricted field to the _Default bucket. Grant the Log Field Accessor role to the security team members.

C. Apply a jsonPayload.user_email exclusion filter to the _Default bucket.

D. Modify the application to toggle inclusion of user_email when the LOG_USER_EMAIL environment variable is set to true. Restrict the engineering team members who can change the production environment variable by using the CODEOWNERS file.
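The restricted-field idea in option B can be pictured with a toy Python model. This is not the Cloud Logging API. In this model the stored entry keeps every field, and a reader without a field-access grant (represented by a hypothetical `can_read_restricted` flag) gets a view with the restricted path removed. `RESTRICTED_FIELDS`, `view_entry`, and the sample entry are illustrative assumptions.

```python
import copy
from typing import Any

# Hypothetical set of restricted field paths (dot-separated), mirroring
# the jsonPayload.user_email field from the question.
RESTRICTED_FIELDS = {"jsonPayload.user_email"}


def _delete_path(entry: dict[str, Any], path: str) -> None:
    """Remove a dot-separated path from a nested dict, if it is present."""
    *parents, leaf = path.split(".")
    node: Any = entry
    for key in parents:
        if not isinstance(node, dict) or key not in node:
            return
        node = node[key]
    if isinstance(node, dict):
        node.pop(leaf, None)


def view_entry(entry: dict[str, Any], can_read_restricted: bool) -> dict[str, Any]:
    """Return the entry as a given reader would see it (toy model only)."""
    if can_read_restricted:
        return entry
    redacted = copy.deepcopy(entry)  # never mutate the stored entry
    for path in RESTRICTED_FIELDS:
        _delete_path(redacted, path)
    return redacted


entry = {
    "severity": "INFO",
    "jsonPayload": {"order_id": "A-1001", "user_email": "alice@example.com"},
}
print(view_entry(entry, can_read_restricted=False))
# prints {'severity': 'INFO', 'jsonPayload': {'order_id': 'A-1001'}}
```

In this model the stored entry is never modified; only what each reader sees differs.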

Question 172

Your organization is running multiple Google Kubernetes Engine (GKE) clusters in a project. You need to design a highly available solution to collect and query both domain-specific workload metrics and GKE default metrics across all clusters, while minimizing operational overhead. What should you do?

A. Use Prometheus operator to install Prometheus in every cluster and scrape the metrics. Configure remote-write to one central Prometheus. Query the central Prometheus instance.

B. Enable managed collection on every GKE cluster. Query the metrics in BigQuery.

C. Use Prometheus operator to install Prometheus in every cluster and scrape the metrics. Ensure that a Thanos sidecar is enabled on every Prometheus instance. Configure Thanos in the central cluster. Query the central Thanos instance.

D. Enable managed collection on every GKE cluster. Query the metrics in Cloud Monitoring.

Question 173

Your company stores a large volume of infrequently used data in Cloud Storage. The projects in your company's CustomerService folder access Cloud Storage frequently, but store very little data. You want to enable Data Access audit logging across the company to identify data usage patterns. You need to exclude the CustomerService folder projects from Data Access audit logging. What should you do?

A. Enable Data Access audit logging for Cloud Storage at the organization level, and configure exempted principals to include users of the CustomerService folder.

B. Enable Data Access audit logging for Cloud Storage at the organization level, with no additional configuration.

C. Enable Data Access audit logging for Cloud Storage for all projects and folders other than the CustomerService folder.

D. Enable Data Access audit logging for Cloud Storage for all projects and folders, and configure exempted principals to include users of the CustomerService folder.
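Audit log configuration is inherited down the resource hierarchy and is additive: a resource's effective configuration is the union of its own settings and those of its ancestors, so a folder cannot switch off Data Access logs that its organization enables. The toy Python model below illustrates that union. The resource names and the `PARENT` map are hypothetical, and this is not the IAM policy API; only the log type names `DATA_READ` and `DATA_WRITE` match the real audit log types.

```python
# Toy model of audit-config inheritance; resource names are hypothetical.
PARENT = {
    "folders/customer-service": "organizations/example",
    "folders/analytics": "organizations/example",
    "projects/cs-portal": "folders/customer-service",
    "projects/archive": "folders/analytics",
}


def ancestors(resource: str) -> list[str]:
    """Return the resource followed by its parents up to the organization."""
    chain = [resource]
    while chain[-1] in PARENT:
        chain.append(PARENT[chain[-1]])
    return chain


def effective_log_types(resource: str, configs: dict[str, set[str]]) -> set[str]:
    """Union of the log types enabled on the resource and all its ancestors."""
    enabled: set[str] = set()
    for node in ancestors(resource):
        enabled |= configs.get(node, set())
    return enabled


data_access = {"DATA_READ", "DATA_WRITE"}

# Enabled once at the organization: every descendant inherits it.
org_wide = {"organizations/example": data_access}
# Enabled only on the analytics folder: customer-service projects are untouched.
per_folder = {"folders/analytics": data_access}

print(effective_log_types("projects/cs-portal", org_wide))
print(effective_log_types("projects/cs-portal", per_folder))
```

Because the model only ever takes unions, nothing set lower in the tree can remove a log type enabled higher up.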

Question 174

You have an application running in production on Cloud Run. Your team recently finished developing a new version (revision B) of the application. You want to test the new revision on 10% of your clients by using the least amount of effort. What should you do?

A. Deploy the new revision to the existing service without traffic allocated. Tag the revision and share the URL with 10% of your clients.

B. Create a new service, and deploy the new revision on the new service. Deploy a new revision of the old application where the application routes a percentage of the traffic to the new service.

C. Create a new service, and deploy the new revision on that new service. Create a load balancer to split the traffic between the old service and the new service.

D. Deploy the new revision to the existing service without traffic allocated. Split the traffic between the old revision and the new revision.
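A percentage-based split between two revisions can be pictured as a weighted random choice made for each request. The sketch below is a toy model, not Cloud Run's router. The revision names `rev-a` and `rev-b` are hypothetical, and a fixed seed keeps the run repeatable.

```python
import random
from collections import Counter


def route(weights: dict[str, int], rng: random.Random) -> str:
    """Pick the revision that serves one request, weighted by percentage."""
    names = list(weights)
    return rng.choices(names, weights=[weights[n] for n in names], k=1)[0]


def simulate(weights: dict[str, int], requests: int, seed: int = 0) -> Counter:
    """Route `requests` requests and count how many each revision served."""
    if sum(weights.values()) != 100:
        raise ValueError("traffic percentages must add up to 100")
    rng = random.Random(seed)  # fixed seed keeps the run repeatable
    return Counter(route(weights, rng) for _ in range(requests))


counts = simulate({"rev-a": 90, "rev-b": 10}, requests=10_000)
print(counts["rev-b"] / 10_000)  # close to 0.10, not exactly 0.10
```

On Cloud Run itself, a revision can be deployed with `gcloud run deploy --no-traffic`, and the percentages are then set with `gcloud run services update-traffic` and its `--to-revisions=REVISION=PERCENT,...` flag.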

Question 175

You are designing a new multi-tenant Google Kubernetes Engine (GKE) cluster for a customer. Your customer is concerned about the risks associated with using long-lived credentials. The customer requires that each GKE workload be granted the minimum Identity and Access Management (IAM) permissions, following the principle of least privilege (PoLP). You need to design an IAM impersonation solution while following Google-recommended practices. What should you do?

A. 1. Create a Google service account.

2. Create a node pool, and set the Google service account as the default identity.

3. Ensure that workloads can only run on the designated node pool by using node selectors, taints, and tolerations.

4. Repeat for each workload.

B. 1. Create a Google service account.

2. Create a node pool without taints, and set the Google service account as the default identity.

3. Grant IAM permissions to the Google service account.

C. 1. Create a Google service account.

2. Create a Kubernetes service account in a Workload Identity-enabled cluster.

3. Link the Google service account with the Kubernetes service account by using the roles/iam.workloadIdentityUser role and the iam.gke.io/gcp-service-account annotation.

4. Map the Kubernetes service account to the workload.

5. Repeat for each workload.

D. 1. Create a Google service account.

2. Create a service account key for the Google service account.

3. Create a Kubernetes secret with the service account key.

4. Ensure that the workload mounts the secret, and set the GOOGLE_APPLICATION_CREDENTIALS environment variable to point at the mount path.

5. Repeat for each workload.
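The Workload Identity steps in option C produce three small objects per workload. The Python sketch below builds them as plain dicts. The role name and annotation key come from option C; the member format `serviceAccount:PROJECT_ID.svc.id.goog[NAMESPACE/KSA_NAME]` is the Workload Identity pool identity for a Kubernetes service account. The function name and all project, namespace, and account names are hypothetical.

```python
import json


def workload_identity_objects(project_id: str, namespace: str,
                              ksa_name: str, gsa_email: str):
    """Build the KSA manifest, the IAM binding, and the Deployment patch."""
    # Kubernetes service account annotated with the Google service account.
    ksa_manifest = {
        "apiVersion": "v1",
        "kind": "ServiceAccount",
        "metadata": {
            "name": ksa_name,
            "namespace": namespace,
            "annotations": {"iam.gke.io/gcp-service-account": gsa_email},
        },
    }
    # Binding to add to the Google service account's own IAM policy, letting
    # this one KSA impersonate it (no key file is ever created).
    iam_binding = {
        "role": "roles/iam.workloadIdentityUser",
        "members": [f"serviceAccount:{project_id}.svc.id.goog[{namespace}/{ksa_name}]"],
    }
    # Patch that maps the KSA to the workload's Pods.
    deployment_patch = {"spec": {"template": {"spec": {"serviceAccountName": ksa_name}}}}
    return ksa_manifest, iam_binding, deployment_patch


ksa, binding, patch = workload_identity_objects(
    "my-project", "payments", "payments-ksa",
    "payments-gsa@my-project.iam.gserviceaccount.com",
)
print(json.dumps(binding, indent=2))
```

Calling the helper once per workload, each with its own Google service account, is what keeps every workload's permissions separate.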

Question 176

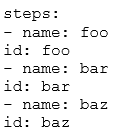

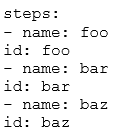

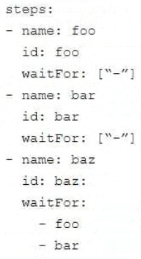

You are configuring a CI pipeline in Cloud Build. When you test the pipeline with the following cloudbuild.yaml definition, the foo step and the bar step each take 5 minutes:

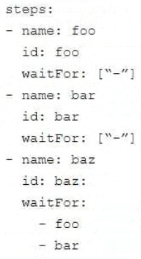

The foo step and bar step are independent of each other. The baz step needs both the foo and bar steps to be completed before starting. You want to use parallelism to reduce build times. What should you do?

A. Modify the build script to add:

    options:
      machineType: 'E2_HIGHCPU_8'

B. Modify the build script to add:

    options:
      machineType: 'E2_HIGHCPU_32'

C.

D.
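By default Cloud Build runs steps one after another. A step's `waitFor` field lists the step `id`s it must wait for, and `waitFor: ['-']` lets a step start as soon as the build begins. The time saved by running foo and bar in parallel is a critical-path calculation, sketched below in Python. The 2-minute baz duration is a hypothetical placeholder; the question gives only the 5-minute foo and bar durations.

```python
def finish_times(durations: dict[str, int], deps: dict[str, list[str]]) -> dict[str, int]:
    """Earliest finish time of each step when dependencies are honoured
    and steps without a dependency between them run in parallel."""
    memo: dict[str, int] = {}

    def finish(step: str) -> int:
        if step not in memo:
            start = max((finish(d) for d in deps[step]), default=0)
            memo[step] = start + durations[step]
        return memo[step]

    return {step: finish(step) for step in durations}


durations = {"foo": 5, "bar": 5, "baz": 2}  # minutes; baz is hypothetical

# Default behaviour: each step waits for all previous steps.
sequential = {"foo": [], "bar": ["foo"], "baz": ["foo", "bar"]}
# With waitFor: foo and bar start immediately ('-'), baz waits for both.
parallel = {"foo": [], "bar": [], "baz": ["foo", "bar"]}

print(max(finish_times(durations, sequential).values()))  # 12
print(max(finish_times(durations, parallel).values()))    # 7
```

Whatever baz actually takes, the parallel layout removes one 5-minute step from the critical path.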

Question 177

You receive a Cloud Monitoring alert indicating potential malicious activity on a node in your Google Kubernetes Engine (GKE) cluster. The alert suggests a possible compromised container running on that node. You need to isolate this node to prevent further compromise while investigating the issue. You also want to minimize disruption to applications running on the cluster. What should you do?

A. Taint the suspicious node to prevent Pods that have interacted with it from being scheduled on other nodes in the cluster.

B. Scale down the deployment associated with the compromised container to zero other nodes

C. Restart the node to disrupt the malicious activity, and force all Pods to be rescheduled on other nodes.

D. Cordon the node to prevent new Pods from being scheduled, then drain the node to safely remove existing Pods and reschedule them to other nodes.
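Cordoning marks a node unschedulable, and draining (which also cordons the node first) evicts its Pods so that their controllers recreate them elsewhere. The toy scheduler below models only that mechanism. It is not the Kubernetes API; the node and Pod names and the "fewest Pods wins" placement rule are hypothetical simplifications.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    unschedulable: bool = False
    pods: list[str] = field(default_factory=list)


def schedule(pod: str, nodes: list[Node]) -> str:
    """Place a Pod on the schedulable node with the fewest Pods."""
    candidates = [n for n in nodes if not n.unschedulable]
    if not candidates:
        raise RuntimeError(f"no schedulable node for {pod}")
    target = min(candidates, key=lambda n: len(n.pods))
    target.pods.append(pod)
    return target.name


def cordon(node: Node) -> None:
    """Stop new Pods from landing on the node; running Pods stay put."""
    node.unschedulable = True


def drain(node: Node, nodes: list[Node]) -> dict[str, str]:
    """Cordon the node, evict its Pods, and reschedule them elsewhere."""
    cordon(node)
    evicted, node.pods = node.pods, []
    return {pod: schedule(pod, nodes) for pod in evicted}


nodes = [Node("node-a", pods=["api-1"]),
         Node("node-b", pods=["web-1", "checkout-1"]),
         Node("node-c")]
suspect = nodes[1]
moved = drain(suspect, nodes)
print(moved)  # {'web-1': 'node-c', 'checkout-1': 'node-a'}
```

A real drain also honours PodDisruptionBudgets, which this model ignores.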

Question 178

Your company has an application deployed on Google Kubernetes Engine (GKE) consisting of 12 microservices. Multiple teams are working concurrently on various features across three environments: Dev, Staging, and Prod. Developers report dependency test failures and delayed releases due to deployments from multiple feature branches in the shared Dev GKE cluster.

You need to implement a cost-effective solution for developers to test their microservice features in a stable development environment isolated from other development activities. What should you do?

A. Automate CI pipelines by using Cloud Build for container image creation and Kubernetes manifest updates from main branch merge requests. Integrate with Config Sync to test new images in dynamically created namespaces on the Dev GKE cluster with autoscaling enabled. Implement a post-test namespace cleanup routine.

B. Automate CI pipelines by using Cloud Build to create container images and update Kubernetes manifests for each commit. Use Cloud Deploy for progressive delivery to Dev, Staging, and Prod GKE clusters. Enable Config Sync for consistent Kubernetes configurations across environments.

C. Use Cloud Build to automate CI pipelines and update Kubernetes manifest files from feature branch commits. Integrate with Config Sync to test new images in dynamically created namespaces on the Dev GKE cluster with autoscaling enabled. Implement a post-test namespace cleanup routine.

D. Use Cloud Build to automate CI pipelines and update Kubernetes manifest files from feature branch commits. Integrate with Config Sync to test new images in dynamically created GKE Dev clusters for each feature branch, which are deleted upon merge request.
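Per-feature-branch namespaces need a valid name derived from the branch. Kubernetes namespace names must be RFC 1123 labels: at most 63 characters, lowercase alphanumerics or `-`, starting and ending with an alphanumeric. The helper below is a hypothetical sketch (the `namespace_for_branch` name and the `dev-` prefix are assumptions). It appends a short hash when truncation is needed so that long branch names stay distinct.

```python
import hashlib
import re

MAX_LEN = 63  # RFC 1123 label length limit


def namespace_for_branch(branch: str, prefix: str = "dev-") -> str:
    """Turn a Git branch name into a valid Kubernetes namespace name."""
    # Lowercase, and collapse every run of other characters into one '-'.
    slug = re.sub(r"[^a-z0-9]+", "-", branch.lower()).strip("-")
    digest = hashlib.sha1(branch.encode("utf-8")).hexdigest()[:8]
    name = f"{prefix}{slug or digest}"  # fall back to the hash if nothing is left
    if len(name) > MAX_LEN:
        # Truncate, then append the hash so the name stays unique and ends
        # with an alphanumeric character.
        name = f"{name[:MAX_LEN - len(digest) - 1].rstrip('-')}-{digest}"
    return name


print(namespace_for_branch("feature/PAY-123_new-checkout"))
# prints dev-feature-pay-123-new-checkout
```

A post-test cleanup routine could then remove the namespace with `kubectl delete namespace <name>`.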

Question 179

You are troubleshooting a failed deployment in your CI/CD pipeline. The deployment logs indicate that the application container failed to start due to a missing environment variable. You need to identify the root cause and implement a solution within your CI/CD workflow to prevent this issue from recurring. What should you do?

A. Use a canary deployment strategy.

B. Implement static code analysis in the CI pipeline.

C. Run integration tests in the CI pipeline.

D. Enable Cloud Audit Logs for the deployment.
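One way a CI stage could catch a missing environment variable before deployment is to compare the variables the application requires against those declared in the rendered deployment manifest. This is a hypothetical sketch. The `REQUIRED_ENV` names, the image, and the Kubernetes-style Deployment shape (`spec.template.spec.containers[].env`) are assumptions chosen for illustration.

```python
# Hypothetical list of variables the application refuses to start without.
REQUIRED_ENV = {"DATABASE_URL", "PAYMENT_API_KEY"}


def missing_env(manifest: dict, required: set[str]) -> dict[str, list[str]]:
    """Map each container name to the required variables it does not declare.

    Only explicit `env` entries are checked; variables injected through
    `envFrom` (ConfigMaps or Secrets) would need an extra lookup.
    """
    problems: dict[str, list[str]] = {}
    for container in manifest["spec"]["template"]["spec"]["containers"]:
        declared = {e["name"] for e in container.get("env", [])}
        missing = sorted(required - declared)
        if missing:
            problems[container["name"]] = missing
    return problems


manifest = {
    "spec": {"template": {"spec": {"containers": [{
        "name": "payments",
        "image": "example.com/payments:1.0",
        "env": [{"name": "DATABASE_URL", "value": "postgres://db:5432/app"}],
    }]}}}
}

problems = missing_env(manifest, REQUIRED_ENV)
if problems:
    print(f"FAIL: missing environment variables: {problems}")
```

In a pipeline, a non-empty result would fail the stage before anything reaches the cluster.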

Question 180

You work for a company that offers a free photo processing application. You are designing the infrastructure for the backend service that processes the photos. The service:

• Uses Cloud Storage to store both unprocessed and processed photos.

• Can resume processing photos in the event of a failure.

• Is not suitable for containerization.

There is no SLO for the time taken to process a photo. You need to choose the most cost-effective solution for running the service. What should you do?

A. Deploy the service by using Cloud Run.

B. Deploy the service by using standard VMs with a 3-year committed use discount.

C. Deploy the service by using GKE.

D. Deploy the service by using Spot VMs.