Question 71

Your company is planning to migrate their on-premises Hadoop environment to the cloud. The increasing cost of storing and maintaining data in HDFS is a major concern for your company. You also want to make minimal changes to existing data analytics jobs and existing architecture.

How should you proceed with the migration?

A. Migrate your data stored in Hadoop to BigQuery. Change your jobs to source their information from BigQuery instead of the on-premises Hadoop environment.

B. Create Compute Engine instances with HDD instead of SSD to save costs. Then perform a full migration of your existing environment into the new one in Compute Engine instances.

C. Create a Cloud Dataproc cluster on Google Cloud Platform, and then migrate your Hadoop environment to the new Cloud Dataproc cluster. Move your HDFS data into larger HDD disks to save on storage costs.

D. Create a Cloud Dataproc cluster on Google Cloud Platform, and then migrate your Hadoop code objects to the new cluster. Move your data to Cloud Storage and leverage the Cloud Dataproc connector to run jobs on that data.

Question 72

Your data is stored in Cloud Storage buckets. Fellow developers have reported that data downloaded from Cloud Storage is resulting in slow API performance.

You want to research the issue to provide details to the GCP support team.

Which command should you run?

A. gsutil test -o output.json gs://my-bucket

B. gsutil perfdiag -o output.json gs://my-bucket

C. gcloud compute scp example-instance:~/test-data -o output.json gs://my-bucket

D. gcloud services test -o output.json gs://my-bucket

Question 73

You are using Cloud Build to promote a Docker image to Development, Test, and Production environments. You need to ensure that the same Docker image is deployed to each of these environments.

How should you identify the Docker image in your build?

A. Use the latest Docker image tag.

B. Use a unique Docker image name.

C. Use the digest of the Docker image.

D. Use a semantic version Docker image tag.
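
As background for the options above, an image can be referenced either by a tag (`name:tag`) or by a digest (`name@sha256:<hex>`). The following is a minimal sketch of how the two reference forms differ, assuming a hypothetical repository name and stand-in bytes in place of a real image manifest; it does not call Docker or Cloud Build.

```python
import hashlib


def tag_reference(repository: str, tag: str) -> str:
    """Build a tag-style reference. A tag is a label chosen by the publisher."""
    return f"{repository}:{tag}"


def digest_reference(repository: str, manifest_bytes: bytes) -> str:
    """Build a digest-style reference from a SHA-256 hash of the given bytes."""
    digest = hashlib.sha256(manifest_bytes).hexdigest()
    return f"{repository}@sha256:{digest}"


# Hypothetical repository and stand-in manifest contents, for illustration only.
repo = "gcr.io/example-project/example-app"
manifest_v1 = b'{"layers": ["a", "b"]}'
manifest_v2 = b'{"layers": ["a", "c"]}'

print(tag_reference(repo, "latest"))
print(digest_reference(repo, manifest_v1))
# Different content yields a different digest-style reference.
print(digest_reference(repo, manifest_v1) != digest_reference(repo, manifest_v2))
```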

Question 74

Your company has created an application that uploads a report to a Cloud Storage bucket. When the report is uploaded to the bucket, you want to publish a message to a Cloud Pub/Sub topic. You want to implement a solution that will take a small amount of effort to implement.

What should you do?

A. Configure the Cloud Storage bucket to trigger Cloud Pub/Sub notifications when objects are modified.

B. Create an App Engine application to receive the file; when it is received, publish a message to the Cloud Pub/Sub topic.

C. Create a Cloud Function that is triggered by the Cloud Storage bucket. In the Cloud Function, publish a message to the Cloud Pub/Sub topic.

D. Create an application deployed in a Google Kubernetes Engine cluster to receive the file; when it is received, publish a message to the Cloud Pub/Sub topic.

Question 75

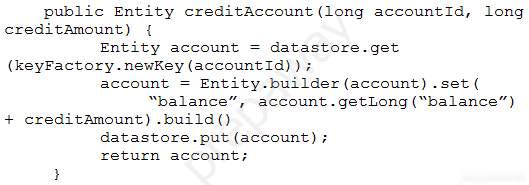

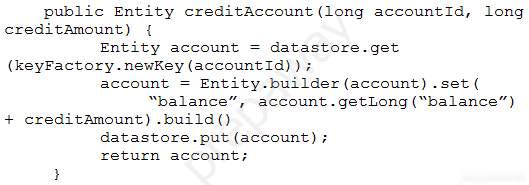

Your teammate has asked you to review the code below, which is adding a credit to an account balance in Cloud Datastore.

Which improvement should you suggest your teammate make?

A. Get the entity with an ancestor query.

B. Get and put the entity in a transaction.

C. Use a strongly consistent transactional database.

D. Don't return the account entity from the function.

Question 76

Your company stores their source code in a Cloud Source Repositories repository. Your company wants to build and test their code on each source code commit to the repository and requires a solution that is managed and has minimal operations overhead.

Which method should they use?

A. Use Cloud Build with a trigger configured for each source code commit.

B. Use Jenkins deployed via the Google Cloud Platform Marketplace, configured to watch for source code commits.

C. Use a Compute Engine virtual machine instance with an open source continuous integration tool, configured to watch for source code commits.

D. Use a source code commit trigger to push a message to a Cloud Pub/Sub topic that triggers an App Engine service to build the source code.

Question 77

You are writing a Compute Engine hosted application in project A that needs to securely authenticate to a Cloud Pub/Sub topic in project B.

What should you do?

A. Configure the instances with a service account owned by project B. Add the service account as a Cloud Pub/Sub publisher to project A.

B. Configure the instances with a service account owned by project A. Add the service account as a publisher on the topic.

C. Configure Application Default Credentials to use the private key of a service account owned by project B. Add the service account as a Cloud Pub/Sub publisher to project A.

D. Configure Application Default Credentials to use the private key of a service account owned by project A. Add the service account as a publisher on the topic.
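
As background for the scenario above, a Pub/Sub topic is addressed by a fully qualified resource name that includes its owning project, and publish permission is expressed as an IAM binding of the `roles/pubsub.publisher` role to a member. The sketch below only builds these strings; the project ID, topic ID, and service account email are hypothetical placeholders, and no Google Cloud client library is called.

```python
def topic_path(project_id: str, topic_id: str) -> str:
    """Return the fully qualified Pub/Sub topic resource name."""
    return f"projects/{project_id}/topics/{topic_id}"


def publisher_binding(service_account_email: str) -> dict:
    """Return the shape of an IAM binding that grants publish rights to a service account."""
    return {
        "role": "roles/pubsub.publisher",
        "members": [f"serviceAccount:{service_account_email}"],
    }


# Hypothetical identifiers, for illustration only.
topic = topic_path("project-b", "example-topic")
binding = publisher_binding("example-sa@some-project.iam.gserviceaccount.com")

print(topic)
print(binding)
```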

Question 78

You are developing a corporate tool on Compute Engine for the finance department, which needs to authenticate users and verify that they are in the finance department. All company employees use G Suite.

What should you do?

A. Enable Cloud Identity-Aware Proxy on the HTTP(s) load balancer and restrict access to a Google Group containing users in the finance department. Verify the provided JSON Web Token within the application.

B. Enable Cloud Identity-Aware Proxy on the HTTP(s) load balancer and restrict access to a Google Group containing users in the finance department. Issue client-side certificates to everybody in the finance team and verify the certificates in the application.

C. Configure Cloud Armor Security Policies to restrict access to only corporate IP address ranges. Verify the provided JSON Web Token within the application.

D. Configure Cloud Armor Security Policies to restrict access to only corporate IP address ranges. Issue client-side certificates to everybody in the finance team and verify the certificates in the application.

Question 79

Your API backend is running on multiple cloud providers. You want to generate reports for the network latency of your API.

Which two steps should you take? (Choose two.)

A. Use Zipkin collector to gather data.

B. Use Fluentd agent to gather data.

C. Use Stackdriver Trace to generate reports.

D. Use Stackdriver Debugger to generate reports.

E. Use Stackdriver Profiler to generate reports.

Question 80

Case study -

This is a case study. Case studies are not timed separately. You can use as much exam time as you would like to complete each case. However, there may be additional case studies and sections on this exam. You must manage your time to ensure that you are able to complete all questions included on this exam in the time provided.

To answer the questions included in a case study, you will need to reference information that is provided in the case study. Case studies might contain exhibits and other resources that provide more information about the scenario that is described in the case study. Each question is independent of the other questions in this case study.

At the end of this case study, a review screen will appear. This screen allows you to review your answers and to make changes before you move to the next section of the exam. After you begin a new section, you cannot return to this section.

To start the case study -

To display the first question in this case study, click the Next button. Use the buttons in the left pane to explore the content of the case study before you answer the questions. Clicking these buttons displays information such as business requirements, existing environment, and problem statements. If the case study has an All Information tab, note that the information displayed is identical to the information displayed on the subsequent tabs. When you are ready to answer a question, click the Question button to return to the question.

Company Overview -

HipLocal is a community application designed to facilitate communication between people in close proximity. It is used for event planning and organizing sporting events, and for businesses to connect with their local communities. HipLocal launched recently in a few neighborhoods in Dallas and is rapidly growing into a global phenomenon. Its unique style of hyper-local community communication and business outreach is in demand around the world.

Executive Statement -

We are the number one local community app; it's time to take our local community services global. Our venture capital investors want to see rapid growth and the same great experience for new local and virtual communities that come online, whether their members are 10 or 10,000 miles away from each other.

Solution Concept -

HipLocal wants to expand their existing service, with updated functionality, in new regions to better serve their global customers. They want to hire and train a new team to support these regions in their time zones. They will need to ensure that the application scales smoothly and provides clear uptime data.

Existing Technical Environment -

HipLocal's environment is a mix of on-premises hardware and infrastructure running in Google Cloud Platform. The HipLocal team understands their application well, but has limited experience in global scale applications. Their existing technical environment is as follows:

* Existing APIs run on Compute Engine virtual machine instances hosted in GCP.

* State is stored in a single instance MySQL database in GCP.

* Data is exported to an on-premises Teradata/Vertica data warehouse.

* Data analytics is performed in an on-premises Hadoop environment.

* The application has no logging.

* There are basic indicators of uptime; alerts are frequently fired when the APIs are unresponsive.

Business Requirements -

HipLocal's investors want to expand their footprint and support the increase in demand they are seeing. Their requirements are:

* Expand availability of the application to new regions.

* Increase the number of concurrent users that can be supported.

* Ensure a consistent experience for users when they travel to different regions.

* Obtain user activity metrics to better understand how to monetize their product.

* Ensure compliance with regulations in the new regions (for example, GDPR).

* Reduce infrastructure management time and cost.

* Adopt the Google-recommended practices for cloud computing.

Technical Requirements -

* The application and backend must provide usage metrics and monitoring.

* APIs require strong authentication and authorization.

* Logging must be increased, and data should be stored in a cloud analytics platform.

* Move to serverless architecture to facilitate elastic scaling.

* Provide authorized access to internal apps in a secure manner.

Which database should HipLocal use for storing user activity?

A. BigQuery

B. Cloud SQL

C. Cloud Spanner

D. Cloud Datastore