Question 241

You have deployed a Java application to Cloud Run. Your application requires access to a database hosted on Cloud SQL. Due to regulatory requirements, your connection to the Cloud SQL instance must use its internal IP address. How should you configure the connectivity while following Google-recommended best practices?

A. Configure your Cloud Run service with a Cloud SQL connection.

B. Configure your Cloud Run service to use a Serverless VPC Access connector.

C. Configure your application to use the Cloud SQL Java connector.

D. Configure your application to connect to an instance of the Cloud SQL Auth proxy.
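Whatever connectivity option is chosen, an application can also refuse to start if it is pointed at a non-internal database address. The sketch below is a hypothetical guard, not part of any Cloud SQL connector. It assumes the instance's private IP comes from an RFC 1918 range and that the address is supplied through a hypothetical `DB_HOST` environment variable.

```python
import ipaddress
import os

# RFC 1918 private ranges. Assumption: the Cloud SQL private IP was
# allocated from one of these (private services access ranges usually are).
RFC1918_NETWORKS = [
    ipaddress.ip_network("10.0.0.0/8"),
    ipaddress.ip_network("172.16.0.0/12"),
    ipaddress.ip_network("192.168.0.0/16"),
]


def is_internal_ip(host: str) -> bool:
    """Return True if host is an IP literal inside an RFC 1918 range."""
    try:
        addr = ipaddress.ip_address(host)
    except ValueError:
        # Hostnames are rejected: the requirement is about the IP actually used.
        return False
    # IPv6 addresses never match the IPv4 networks above.
    return any(addr in net for net in RFC1918_NETWORKS)


def require_internal_db_host() -> str:
    """Return DB_HOST (hypothetical variable) or fail if it is not internal."""
    host = os.environ.get("DB_HOST", "")
    if not is_internal_ip(host):
        raise RuntimeError(f"DB_HOST {host!r} is not an internal IP address")
    return host
```

Calling `require_internal_db_host()` at startup makes a misconfigured deployment, for example one using a public IP or a hostname, fail fast instead of silently connecting over the wrong path.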

Question 242

Your application stores customers’ content in a Cloud Storage bucket, with each object being encrypted with the customer's encryption key. The key for each object in Cloud Storage is entered into your application by the customer. You discover that your application is receiving an HTTP 4xx error when reading the object from Cloud Storage. What is a possible cause of this error?

A. You attempted the read operation on the object with the customer's base64-encoded key.

B. You attempted the read operation without the base64-encoded SHA256 hash of the encryption key.

C. You entered the same encryption algorithm specified by the customer when attempting the read operation.

D. You attempted the read operation on the object with the base64-encoded SHA256 hash of the customer's key.
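For context, a Cloud Storage request on an object encrypted with a customer-supplied encryption key (CSEK) carries three headers: the algorithm, the base64-encoded key, and the base64-encoded SHA256 hash of that key. A minimal standard-library sketch of building them follows. The header names are the documented CSEK headers; `csek_headers` is a hypothetical helper, and the random key only stands in for the key the customer enters.

```python
import base64
import hashlib
import os


def csek_headers(raw_key: bytes) -> dict:
    """Build the CSEK request headers for a Cloud Storage read or write."""
    if len(raw_key) != 32:
        raise ValueError("a customer-supplied key must be a 32-byte AES-256 key")
    key_b64 = base64.b64encode(raw_key).decode("ascii")
    # The hash is computed over the raw key bytes, not over the base64 string.
    key_sha256_b64 = base64.b64encode(hashlib.sha256(raw_key).digest()).decode("ascii")
    return {
        "x-goog-encryption-algorithm": "AES256",
        "x-goog-encryption-key": key_b64,
        "x-goog-encryption-key-sha256": key_sha256_b64,
    }


if __name__ == "__main__":
    # Demo only: a random key. In the scenario above the customer supplies it.
    for name, value in csek_headers(os.urandom(32)).items():
        print(f"{name}: {value}")
```

Omitting any of these headers, or sending a hash that does not match the key, causes Cloud Storage to reject the request with a 4xx status.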

Question 243

You have two Google Cloud projects, named Project A and Project B. You need to create a Cloud Function in Project A that saves the output in a Cloud Storage bucket in Project B. You want to follow the principle of least privilege. What should you do?

A. 1. Create a Google service account in Project B.

2. Deploy the Cloud Function with the service account in Project A.

3. Assign this service account the roles/storage.objectCreator role on the storage bucket residing in Project B.

B. 1. Create a Google service account in Project A.

2. Deploy the Cloud Function with the service account in Project A.

3. Assign this service account the roles/storage.objectCreator role on the storage bucket residing in Project B.

C. 1. Determine the default App Engine service account (PROJECT_ID@appspot.gserviceaccount.com) in Project A.

2. Deploy the Cloud Function with the default App Engine service account in Project A.

3. Assign the default App Engine service account the roles/storage.objectCreator role on the storage bucket residing in Project B.

D. 1. Determine the default App Engine service account (PROJECT_ID@appspot.gserviceaccount.com) in Project B.

2. Deploy the Cloud Function with the default App Engine service account in Project A.

3. Assign the default App Engine service account the roles/storage.objectCreator role on the storage bucket residing in Project B.
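Granting a role on the bucket itself, rather than on Project B as a whole, is what keeps the grant narrow. The sketch below edits a bucket IAM policy in the `bindings` shape that Cloud Storage's `getIamPolicy` method returns. The helper `add_bucket_binding`, the project ID `project-a`, and the service account `fn-writer` are hypothetical, and sending the updated policy back (for example with `setIamPolicy`) is left out.

```python
def add_bucket_binding(policy: dict, role: str, member: str) -> dict:
    """Return a copy of a bucket IAM policy with member granted role.

    The input policy is not modified. Conditional bindings are left alone,
    so an unconditional binding is created if only conditional ones exist.
    """
    # Copy each binding and its members list so the caller's policy is untouched.
    bindings = [dict(b, members=list(b["members"])) for b in policy.get("bindings", [])]
    for binding in bindings:
        if binding["role"] == role and "condition" not in binding:
            if member not in binding["members"]:
                binding["members"].append(member)
            break
    else:
        # No unconditional binding for this role yet: add one.
        bindings.append({"role": role, "members": [member]})
    return {**policy, "bindings": bindings}


# Hypothetical dedicated service account for the Cloud Function.
FUNCTION_SA = "serviceAccount:fn-writer@project-a.iam.gserviceaccount.com"
```

Because `roles/storage.objectCreator` only allows creating objects, the function can write its output but cannot read, list, or delete anything in the bucket.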

Question 244

A governmental regulation was recently passed that affects your application. For compliance purposes, you are now required to send a duplicate of specific application logs from your application’s project to a project that is restricted to the security team. What should you do?

A. Create user-defined log buckets in the security team’s project. Configure a Cloud Logging sink to route your application’s logs to log buckets in the security team’s project.

B. Create a job that copies the logs from the _Required log bucket into the security team’s log bucket in their project.

C. Modify the _Default log bucket sink rules to reroute the logs into the security team’s log bucket.

D. Create a job that copies the System Event logs from the _Required log bucket into the security team’s log bucket in their project.
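A Cloud Logging sink needs two things: a destination and a filter that selects the logs to copy. The sketch below builds both as strings, assuming a user-defined log bucket in the security team's project; the project IDs, bucket ID, and log IDs are hypothetical. The destination follows the documented `logging.googleapis.com/projects/PROJECT_ID/locations/LOCATION/buckets/BUCKET_ID` form, and the filter uses the Logging query language. The sink's writer identity also needs permission to write to that bucket, typically through the Logs Bucket Writer role (`roles/logging.bucketWriter`).

```python
from urllib.parse import quote


def log_bucket_destination(project_id: str, location: str, bucket_id: str) -> str:
    """Sink destination string for a user-defined log bucket."""
    return (
        "logging.googleapis.com/projects/"
        f"{project_id}/locations/{location}/buckets/{bucket_id}"
    )


def sink_filter(project_id: str, log_ids: list) -> str:
    """Logging query that matches only the listed logs of one project."""
    # Log IDs must be URL-encoded inside logName (e.g. "/" becomes "%2F").
    clauses = [
        f'logName="projects/{project_id}/logs/{quote(log_id, safe="")}"'
        for log_id in log_ids
    ]
    return " OR ".join(clauses)
```

Because a sink copies matching entries rather than moving them, the application's own `_Default` bucket keeps its logs while the security team's bucket receives the duplicate.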

Question 245

You plan to deploy a new Go application to Cloud Run. The source code is stored in Cloud Source Repositories. You need to configure a fully managed, automated, continuous deployment pipeline that runs when a source code commit is made. You want to use the simplest deployment solution. What should you do?

A. Configure a cron job on your workstations to periodically run gcloud run deploy --source in the working directory.

B. Configure a Jenkins trigger to run the container build and deploy process for each source code commit to Cloud Source Repositories.

C. Configure continuous deployment of new revisions from a source repository for Cloud Run using buildpacks.

D. Use Cloud Build with a trigger configured to run the container build and deploy process for each source code commit to Cloud Source Repositories.

Question 246

Your team has created an application that is hosted on a Google Kubernetes Engine (GKE) cluster. You need to connect the application to a legacy REST service that is deployed in two GKE clusters in two different regions. You want to connect your application to the target service in a way that is resilient. You also want to be able to run health checks on the legacy service on a separate port. How should you set up the connection? (Choose two.)

A. Use Traffic Director with a sidecar proxy to connect the application to the service.

B. Use a proxyless Traffic Director configuration to connect the application to the service.

C. Configure the legacy service's firewall to allow health checks originating from the proxy.

D. Configure the legacy service's firewall to allow health checks originating from the application.

E. Configure the legacy service's firewall to allow health checks originating from the Traffic Director control plane.

Question 247

You have an application running in a production Google Kubernetes Engine (GKE) cluster. You use Cloud Deploy to automatically deploy your application to your production GKE cluster. As part of your development process, you are planning to make frequent changes to the application’s source code and need to select the tools to test the changes before pushing them to your remote source code repository. Your toolset must meet the following requirements:

• Test frequent local changes automatically.

• Local deployment emulates production deployment.

Which tools should you use to test building and running a container on your laptop using minimal resources?

A. Docker Compose and dockerd

B. Terraform and kubeadm

C. Minikube and Skaffold

D. kaniko and Tekton

Question 248

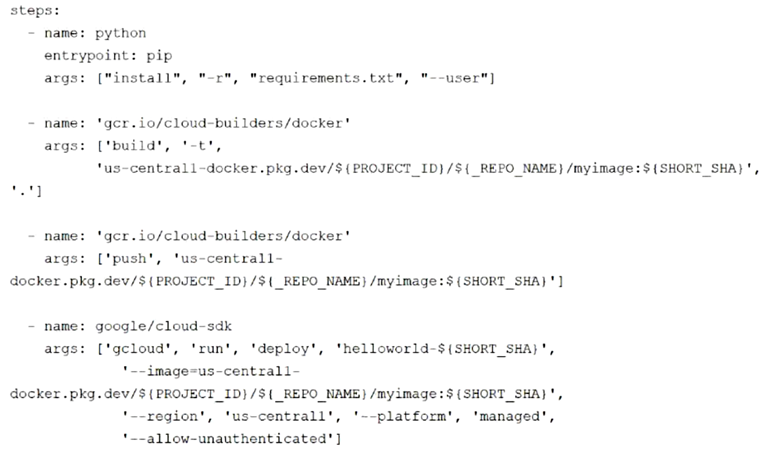

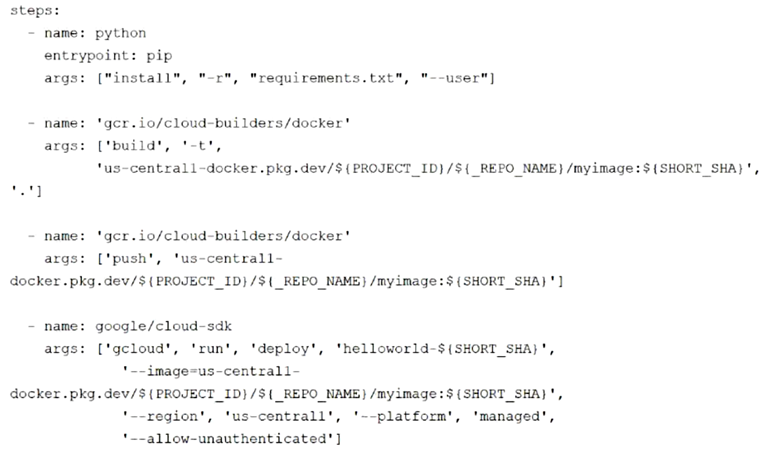

You are deploying a Python application to Cloud Run using Cloud Source Repositories and Cloud Build. The Cloud Build pipeline is shown below:

You want to optimize deployment times and avoid unnecessary steps. What should you do?

A. Remove the step that pushes the container to Artifact Registry.

B. Deploy a new Docker registry in a VPC, and use Cloud Build worker pools inside the VPC to run the build pipeline.

C. Store image artifacts in a Cloud Storage bucket in the same region as the Cloud Run instance.

D. Add the --cache-from argument to the Docker build step in your build config file.
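The `--cache-from` approach usually pairs a pull of the previous image with the build step, so unchanged layers are reused instead of rebuilt. The sketch below generates such a build config as JSON, which Cloud Build accepts as well as YAML. The Artifact Registry path `us-central1-docker.pkg.dev/$PROJECT_ID/my-repo/my-app` is hypothetical, and `$PROJECT_ID` is left for Cloud Build to substitute at build time.

```python
import json

# Hypothetical Artifact Registry image path; $PROJECT_ID is a Cloud Build
# default substitution that is expanded at build time.
IMAGE = "us-central1-docker.pkg.dev/$PROJECT_ID/my-repo/my-app:latest"


def cached_build_config(image: str) -> dict:
    """Cloud Build config that reuses layers from the previously pushed image."""
    return {
        "steps": [
            {
                # Pull the last image so its layers can seed the cache;
                # "|| exit 0" keeps the very first build from failing.
                "name": "gcr.io/cloud-builders/docker",
                "entrypoint": "bash",
                "args": ["-c", f"docker pull {image} || exit 0"],
            },
            {
                "name": "gcr.io/cloud-builders/docker",
                "args": ["build", "-t", image, "--cache-from", image, "."],
            },
        ],
        # Listing the image here makes Cloud Build push it after the steps run.
        "images": [image],
    }


if __name__ == "__main__":
    print(json.dumps(cached_build_config(IMAGE), indent=2))
```

One caveat: when the Docker builder runs with BuildKit, the cached image generally needs inline cache metadata (built with `--build-arg BUILDKIT_INLINE_CACHE=1`) for `--cache-from` to take effect.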

Question 249

You are developing an event-driven application. You have created a topic to receive messages sent to Pub/Sub. You want those messages to be processed in real time. You need the application to be independent from any other system and only incur costs when new messages arrive. How should you configure the architecture?

A. Deploy the application on Compute Engine. Use a Pub/Sub push subscription to process new messages in the topic.

B. Deploy your code on Cloud Functions. Use a Pub/Sub trigger to invoke the Cloud Function. Use the Pub/Sub API to create a pull subscription to the Pub/Sub topic and read messages from it.

C. Deploy the application on Google Kubernetes Engine. Use the Pub/Sub API to create a pull subscription to the Pub/Sub topic and read messages from it.

D. Deploy your code on Cloud Functions. Use a Pub/Sub trigger to handle new messages in the topic.
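With a Pub/Sub trigger, Cloud Functions invokes the function once per published message and passes the payload in the event, so the code never creates or polls a subscription. A minimal sketch of a 1st gen Python background function follows; the function names and the print-based processing step are hypothetical. It would be deployed with a Pub/Sub trigger, for example through the `--trigger-topic` flag of `gcloud functions deploy`.

```python
import base64


def decode_pubsub_event(event: dict) -> str:
    """Extract the UTF-8 message body from a Pub/Sub trigger event."""
    # Pub/Sub delivers the message data base64-encoded in event["data"].
    return base64.b64decode(event.get("data", "")).decode("utf-8")


def handle_message(event, context):
    """Entry point for a 1st gen Cloud Function with a Pub/Sub trigger.

    The platform calls this once per published message, and instances can
    scale to zero between messages, so nothing runs while the topic is idle.
    """
    message = decode_pubsub_event(event)
    # Hypothetical processing step: replace with the application's logic.
    print(f"event {context.event_id}: {message}")
```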

Question 250

You have an application running on Google Kubernetes Engine (GKE). The application is currently using a logging library and is outputting to standard output. You need to export the logs to Cloud Logging, and you need the logs to include metadata about each request. You want to use the simplest method to accomplish this. What should you do?

A. Change your application’s logging library to the Cloud Logging library, and configure your application to export logs to Cloud Logging.

B. Update your application to output logs in JSON format, and add the necessary metadata to the JSON.

C. Update your application to output logs in CSV format, and add the necessary metadata to the CSV.

D. Install the Fluent Bit agent on each of your GKE nodes, and have the agent export all logs from /var/log.
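On GKE, the built-in logging agent already forwards container stdout to Cloud Logging and parses lines that are single JSON objects, mapping special fields such as `severity`, `message`, `httpRequest`, and `logging.googleapis.com/trace` onto the log entry. The sketch below writes such a line with the standard library; `format_log_line` and `log_request` are hypothetical helpers, and the request values are placeholders.

```python
import json


def format_log_line(message, severity, method, url, status, trace=""):
    """Return one JSON log line carrying request metadata for Cloud Logging."""
    entry = {
        "severity": severity,
        "message": message,
        # Parsed into the LogEntry httpRequest field.
        "httpRequest": {
            "requestMethod": method,
            "requestUrl": url,
            "status": status,
        },
    }
    if trace:
        # Expected format: projects/PROJECT_ID/traces/TRACE_ID
        entry["logging.googleapis.com/trace"] = trace
    # json.dumps without indent emits a single line, as the agent expects.
    return json.dumps(entry)


def log_request(message, severity, method, url, status, trace=""):
    # Write to stdout; the node's logging agent collects it from there.
    print(format_log_line(message, severity, method, url, status, trace), flush=True)


if __name__ == "__main__":
    log_request("request served", "INFO", "GET", "/orders/42", 200)
```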