Question 201

Your company uses Cloud Logging to manage large volumes of log data. You need to build a real-time log analysis architecture that pushes logs to a third-party application for processing. What should you do?

A. Create a Cloud Logging log export to Pub/Sub.

B. Create a Cloud Logging log export to BigQuery.

C. Create a Cloud Logging log export to Cloud Storage.

D. Create a Cloud Function to read Cloud Logging log entries and send them to the third-party application.

Question 202

You are developing a new public-facing application that needs to retrieve specific properties in the metadata of users’ objects in their respective Cloud Storage buckets. Due to privacy and data residency requirements, you must retrieve only the metadata and not the object data. You want to maximize the performance of the retrieval process. How should you retrieve the metadata?

A. Use the patch method.

B. Use the compose method.

C. Use the copy method.

D. Use the fields request parameter.
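
As background for the `fields` request parameter mentioned in the options, the following is a minimal sketch of how a partial-response request to the Cloud Storage JSON API could be formed. The bucket name, object name, and the helper `metadata_url` are hypothetical and used only for illustration; authentication and the HTTP call itself are left out.

```python
from urllib.parse import quote, urlencode

# Base path of the Cloud Storage JSON API (v1).
API_ROOT = "https://storage.googleapis.com/storage/v1"


def metadata_url(bucket: str, object_name: str, fields: list[str]) -> str:
    """Build an objects.get URL that asks only for the listed metadata fields.

    Hypothetical helper for illustration; it only builds the URL.
    """
    # Object names may contain "/", which must be percent-encoded in the path.
    path = f"/b/{quote(bucket, safe='')}/o/{quote(object_name, safe='')}"
    # safe="," keeps the comma-separated field list readable in the query string.
    query = urlencode({"fields": ",".join(fields)}, safe=",")
    return f"{API_ROOT}{path}?{query}"


# Assumed example names; no request is sent here.
url = metadata_url("example-bucket", "photos/cat.png", ["name", "size", "metadata"])
print(url)
```

Because the request does not include `alt=media`, the response would carry object metadata rather than object data, and the `fields` list narrows that metadata to the named properties.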

Question 203

You are deploying a microservices application to Google Kubernetes Engine (GKE) that will broadcast livestreams. You expect unpredictable traffic patterns and large variations in the number of concurrent users. Your application must meet the following requirements:

• Scales automatically during popular events and maintains high availability

• Is resilient in the event of hardware failures

How should you configure the deployment parameters? (Choose two.)

A. Distribute your workload evenly using a multi-zonal node pool.

B. Distribute your workload evenly using multiple zonal node pools.

C. Use cluster autoscaler to resize the number of nodes in the node pool, and use a Horizontal Pod Autoscaler to scale the workload.

D. Create a managed instance group for Compute Engine with the cluster nodes. Configure autoscaling rules for the managed instance group.

E. Create alerting policies in Cloud Monitoring based on GKE CPU and memory utilization. Ask an on-duty engineer to scale the workload by executing a script when CPU and memory usage exceed predefined thresholds.
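
As background for the Horizontal Pod Autoscaler mentioned in the options, the sketch below reproduces the basic replica calculation described in the Kubernetes documentation: the desired replica count is the current count scaled by the ratio of the observed metric to the target metric, rounded up. The function name and the min/max bounds are assumptions for illustration, and the real controller also applies a tolerance and stabilization behavior that are omitted here.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 100) -> int:
    """Simplified HPA rule: ceil(current_replicas * current / target), clamped.

    Hypothetical helper; omits the controller's tolerance and stabilization.
    """
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp to the configured bounds, as an HPA does with minReplicas/maxReplicas.
    return max(min_replicas, min(max_replicas, raw))


# 4 pods averaging 200m CPU against a 100m target doubles the workload.
print(desired_replicas(4, 200, 100))  # 8
```

When the scaled-up Pods no longer fit on the existing nodes, cluster autoscaler is the component that adds nodes to the node pool; the two mechanisms operate at different levels.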

Question 204

You work at a rapidly growing financial technology startup. You manage the payment processing application written in Go and hosted on Cloud Run in the Singapore region (asia-southeast1). The payment processing application processes data stored in a Cloud Storage bucket that is also located in the Singapore region.

The startup plans to expand further into the Asia Pacific region. You plan to deploy the Payment Gateway in Jakarta, Hong Kong, and Taiwan over the next six months. Each location has data residency requirements that require customer data to reside in the country where the transaction was made. You want to minimize the cost of these deployments. What should you do?

A. Create a Cloud Storage bucket in each region, and create a Cloud Run service of the payment processing application in each region.

B. Create a Cloud Storage bucket in each region, and create three Cloud Run services of the payment processing application in the Singapore region.

C. Create three Cloud Storage buckets in the Asia multi-region, and create three Cloud Run services of the payment processing application in the Singapore region.

D. Create three Cloud Storage buckets in the Asia multi-region, and create three Cloud Run revisions of the payment processing application in the Singapore region.

Question 205

You recently joined a new team that has a Cloud Spanner database instance running in production. Your manager has asked you to optimize the Spanner instance to reduce cost while maintaining high reliability and availability of the database. What should you do?

A. Use Cloud Logging to check for error logs, and reduce Spanner processing units by small increments until you find the minimum capacity required.

B. Use Cloud Trace to monitor the requests per second of incoming requests to Spanner, and reduce Spanner processing units by small increments until you find the minimum capacity required.

C. Use Cloud Monitoring to monitor the CPU utilization, and reduce Spanner processing units by small increments until you find the minimum capacity required.

D. Use Snapshot Debugger to check for application errors, and reduce Spanner processing units by small increments until you find the minimum capacity required.

Question 206

You recently deployed a Go application on Google Kubernetes Engine (GKE). The operations team has noticed that the application's CPU usage is high even when there is low production traffic. The operations team has asked you to optimize your application's CPU resource consumption. You want to determine which Go functions consume the largest amount of CPU. What should you do?

A. Deploy a Fluent Bit daemonset on the GKE cluster to log data in Cloud Logging. Analyze the logs to get insights into your application code’s performance.

B. Create a custom dashboard in Cloud Monitoring to evaluate the CPU performance metrics of your application.

C. Connect to your GKE nodes using SSH. Run the top command on the shell to extract the CPU utilization of your application.

D. Modify your Go application to capture profiling data. Analyze the CPU metrics of your application in flame graphs in Profiler.

Question 207

Your team manages a Google Kubernetes Engine (GKE) cluster where an application is running. A different team is planning to integrate with this application. Before they start the integration, you need to ensure that the other team cannot make changes to your application, but they can deploy the integration on GKE. What should you do?

A. Using Identity and Access Management (IAM), grant the Viewer IAM role on the cluster project to the other team.

B. Create a new GKE cluster. Using Identity and Access Management (IAM), grant the Editor role on the cluster project to the other team.

C. Create a new namespace in the existing cluster. Using Identity and Access Management (IAM), grant the Editor role on the cluster project to the other team.

D. Create a new namespace in the existing cluster. Using Kubernetes role-based access control (RBAC), grant the Admin role on the new namespace to the other team.

Question 208

You have recently instrumented a new application with OpenTelemetry, and you want to check the latency of your application requests in Trace. You want to ensure that a specific request is always traced. What should you do?

A. Wait 10 minutes, then verify that Trace captures those types of requests automatically.

B. Write a custom script that sends this type of request repeatedly from your dev project.

C. Use the Trace API to apply custom attributes to the trace.

D. Add the X-Cloud-Trace-Context header to the request with the appropriate parameters.
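
As background for the `X-Cloud-Trace-Context` header mentioned in the options, the sketch below builds a header value in the documented `TRACE_ID/SPAN_ID;o=OPTIONS` form, where the trace ID is a 32-character hexadecimal string, the span ID is a decimal unsigned 64-bit integer, and `o=1` requests that the call be traced. The helper name and its defaults are assumptions for illustration.

```python
import re
import secrets
from typing import Optional


def trace_context_header(trace_id: Optional[str] = None,
                         span_id: Optional[int] = None,
                         sampled: bool = True) -> str:
    """Return a value for the X-Cloud-Trace-Context header.

    Hypothetical helper; generates random IDs when none are supplied.
    """
    if trace_id is None:
        trace_id = secrets.token_hex(16)  # 16 random bytes -> 32 hex characters
    if not re.fullmatch(r"[0-9a-f]{32}", trace_id):
        raise ValueError("trace_id must be 32 lowercase hex characters")
    if span_id is None:
        span_id = secrets.randbelow(2**64 - 1) + 1  # random value in 1..2**64-1
    if not 0 < span_id < 2**64:
        raise ValueError("span_id must fit in an unsigned 64-bit integer")
    # o=1 asks for the request to be traced; o=0 asks for it not to be.
    return f"{trace_id}/{span_id};o={1 if sampled else 0}"


headers = {"X-Cloud-Trace-Context": trace_context_header()}
print(headers)
```

The resulting dictionary can be passed as request headers by whichever HTTP client the application already uses.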

Question 209

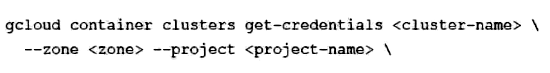

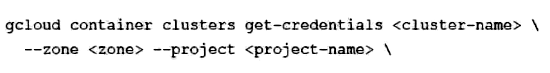

You are trying to connect to your Google Kubernetes Engine (GKE) cluster using kubectl from Cloud Shell. You have deployed your GKE cluster with a public endpoint. From Cloud Shell, you run the following command:

You notice that the kubectl commands time out without returning an error message. What is the most likely cause of this issue?

A. Your user account does not have privileges to interact with the cluster using kubectl.

B. Your Cloud Shell external IP address is not part of the authorized networks of the cluster.

C. The Cloud Shell is not part of the same VPC as the GKE cluster.

D. A VPC firewall is blocking access to the cluster’s endpoint.

Question 210

You are developing a web application that contains private images and videos stored in a Cloud Storage bucket. Your users are anonymous and do not have Google Accounts. You want to use your application-specific logic to control access to the images and videos. How should you configure access?

A. Cache each web application user's IP address to create a named IP table using Google Cloud Armor. Create a Google Cloud Armor security policy that allows users to access the backend bucket.

B. Grant the Storage Object Viewer IAM role to allUsers. Allow users to access the bucket after authenticating through your web application.

C. Configure Identity-Aware Proxy (IAP) to authenticate users into the web application. Allow users to access the bucket after authenticating through IAP.

D. Generate a signed URL that grants read access to the bucket. Allow users to access the URL after authenticating through your web application.