Question 31

A company recently launched an application that is more popular than expected. The company wants to ensure the application can scale to meet increasing demands and provide reliability using multiple Availability Zones (AZs). The application runs on a fleet of Amazon EC2 instances behind an Application Load Balancer (ALB). A DevOps engineer has created an Auto Scaling group across multiple AZs for the application. Instances launched in the newly added AZs are not receiving any traffic for the application.

What is likely causing this issue?

A. Auto Scaling groups can create new instances in a single AZ only.

B. The EC2 instances have not been manually associated to the ALB.

C. The ALB should be replaced with a Network Load Balancer (NLB).

D. The new AZ has not been added to the ALB.

Question 32

A DevOps Engineer manages a web application that runs on Amazon EC2 instances behind an Application Load Balancer (ALB). The instances run in an EC2 Auto Scaling group across multiple Availability Zones. The engineer needs to implement a deployment strategy that:

- Launches a second fleet of instances with the same capacity as the original fleet.

- Maintains the original fleet unchanged while the second fleet is launched.

- Transitions traffic to the second fleet when the second fleet is fully deployed.

- Terminates the original fleet automatically 1 hour after transition.

Which solution will satisfy these requirements?

A. Use an AWS CloudFormation template with a retention policy for the ALB set to 1 hour. Update the Amazon Route 53 record to reflect the new ALB.

B. Use two AWS Elastic Beanstalk environments to perform a blue/green deployment from the original environment to the new one. Create an application version lifecycle policy to terminate the original environment in 1 hour.

C. Use AWS CodeDeploy with a deployment group configured with a blue/green deployment configuration. Select the option Terminate the original instances in the deployment group with a waiting period of 1 hour.

D. Use AWS Elastic Beanstalk with the configuration set to Immutable. Create an .ebextension using the Resources key that sets the deletion policy of the ALB to 1 hour, and deploy the application.

Question 33

A healthcare services company is concerned about the growing costs of software licensing for an application for monitoring patient wellness. The company wants to create an audit process to ensure that the application is running exclusively on Amazon EC2 Dedicated Hosts. A DevOps Engineer must create a workflow to audit the application to ensure compliance.

What steps should the Engineer take to meet this requirement with the LEAST administrative overhead?

A. Use AWS Systems Manager Configuration Compliance. Use calls to the put-compliance-items API action to scan and build a database of noncompliant EC2 instances based on their host placement configuration. Use an Amazon DynamoDB table to store these instance IDs for fast access. Generate a report through Systems Manager by calling the list-compliance-summaries API action.

B. Use custom Java code running on an EC2 instance. Set up EC2 Auto Scaling for the instance depending on the number of instances to be checked. Send the list of noncompliant EC2 instance IDs to an Amazon SQS queue. Set up another worker instance to process instance IDs from the SQS queue and write them to Amazon DynamoDB. Use an AWS Lambda function to terminate noncompliant instance IDs obtained from the queue, and send them to an Amazon SNS email topic for distribution.

C. Use AWS Config. Identify all EC2 instances to be audited by enabling Config Recording on all Amazon EC2 resources for the region. Create a custom AWS Config rule that triggers an AWS Lambda function by using the "config-rule-change-triggered" blueprint. Modify the Lambda evaluateCompliance() function to verify host placement to return a NON_COMPLIANT result if the instance is not running on an EC2 Dedicated Host. Use the AWS Config report to address noncompliant instances.

D. Use AWS CloudTrail. Identify all EC2 instances to be audited by analyzing all calls to the EC2 RunCommand API action. Invoke an AWS Lambda function that analyzes the host placement of the instance. Store the EC2 instance ID of noncompliant resources in an Amazon RDS MySQL DB instance. Generate a report by querying the RDS instance and exporting the query results to a CSV text file.
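
As an illustration of the host placement check that option C describes, here is a minimal sketch in Python. It assumes the AWS Config configuration item for an EC2 instance exposes the tenancy under `configuration.placement.tenancy`, with the value `host` for Dedicated Hosts. The function name `evaluate_compliance` is a hypothetical stand-in for the blueprint's `evaluateCompliance()` function and is not taken from the question.

```python
def evaluate_compliance(configuration_item: dict) -> str:
    """Return an AWS Config compliance value for one configuration item.

    Assumption: the item is shaped like an AWS Config configuration item
    for AWS::EC2::Instance, with tenancy under configuration.placement.
    """
    # Only EC2 instances are in scope for this audit.
    if configuration_item.get("resourceType") != "AWS::EC2::Instance":
        return "NOT_APPLICABLE"

    placement = configuration_item.get("configuration", {}).get("placement", {})

    # Tenancy "host" means the instance runs on an EC2 Dedicated Host.
    if placement.get("tenancy") == "host":
        return "COMPLIANT"
    return "NON_COMPLIANT"
```

In a real rule, the Lambda function would report this value back to AWS Config. That step is omitted here.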

Question 34

A company has 100 GB of log data in an Amazon S3 bucket stored in .csv format. SQL developers want to query this data and generate graphs to visualize it. They also need an efficient, automated way to store metadata from the .csv file.

Which combination of steps should be taken to meet these requirements with the LEAST amount of effort? (Choose three.)

A. Filter the data through AWS X-Ray to visualize the data.

B. Filter the data through Amazon QuickSight to visualize the data.

C. Query the data with Amazon Athena.

D. Query the data with Amazon Redshift.

E. Use AWS Glue as the persistent metadata store.

F. Use Amazon S3 as the persistent metadata store.

Question 35

A DevOps Engineer has several legacy applications that all generate different log formats. The Engineer must standardize the formats before writing them to Amazon S3 for querying and analysis.

How can this requirement be met at the LOWEST cost?

A. Have the application send its logs to an Amazon EMR cluster and normalize the logs before sending them to Amazon S3.

B. Have the application send its logs to Amazon QuickSight, then use the Amazon QuickSight SPICE engine to normalize the logs. Do the analysis directly from Amazon QuickSight.

C. Keep the logs in Amazon S3 and use Amazon Redshift Spectrum to normalize the logs in place.

D. Use Amazon Kinesis Agent on each server to upload the logs and have Amazon Kinesis Data Firehose use an AWS Lambda function to normalize the logs before writing them to Amazon S3.
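
To make the transformation step in option D concrete, the following is a rough sketch of a Kinesis Data Firehose transformation handler in Python. The event and response shapes (`records`, `recordId`, `result`, base64-encoded `data`) follow the Firehose data transformation contract. The two input formats handled by `normalize` (a JSON line and a `level | message` line) are hypothetical examples, since the question does not say what the legacy formats look like.

```python
import base64
import json


def normalize(line: str) -> dict:
    """Map one raw log line to a common shape (hypothetical formats)."""
    line = line.strip()
    if line.startswith("{"):
        # Assumed format 1: JSON with "level" and "msg" fields.
        raw = json.loads(line)
        return {"level": str(raw.get("level", "INFO")).upper(),
                "message": raw.get("msg", "")}
    # Assumed format 2: "level | message".
    level, sep, message = line.partition("|")
    if not sep:
        return {"level": "UNKNOWN", "message": line}
    return {"level": level.strip().upper(), "message": message.strip()}


def handler(event, context):
    """Firehose transformation entry point: one output record per input."""
    output = []
    for record in event["records"]:
        try:
            text = base64.b64decode(record["data"]).decode("utf-8")
            payload = json.dumps(normalize(text)) + "\n"
            output.append({
                "recordId": record["recordId"],
                "result": "Ok",
                "data": base64.b64encode(payload.encode("utf-8")).decode("ascii"),
            })
        except (ValueError, UnicodeDecodeError):
            # Hand unparseable records back unchanged and mark them failed.
            output.append({
                "recordId": record["recordId"],
                "result": "ProcessingFailed",
                "data": record["data"],
            })
    return {"records": output}
```

Each normalized record ends with a newline so that the objects Firehose writes to S3 contain one JSON document per line.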

Question 36

A company needs to implement a robust CI/CD pipeline to automate the deployment of an application in AWS. The pipeline must support continuous integration, continuous delivery, and automatic rollback upon deployment failure. The entire CI/CD pipeline must be capable of being re-provisioned in alternate AWS accounts or Regions within minutes. A DevOps engineer has already created an AWS CodeCommit repository to store the source code.

Which combination of actions should be taken when building this pipeline to meet these requirements? (Choose three.)

A. Configure an AWS CodePipeline pipeline with a build stage using AWS CodeBuild.

B. Copy the build artifact from CodeCommit to Amazon S3.

C. Create an Auto Scaling group of Amazon EC2 instances behind an Application Load Balancer (ALB) and set the ALB as the deployment target in AWS CodePipeline.

D. Create an AWS Elastic Beanstalk environment as the deployment target in AWS CodePipeline.

E. Implement an Amazon SQS queue to decouple the pipeline components.

F. Provision all resources using AWS CloudFormation.

Question 37

A company is building a solution for storing files containing Personally Identifiable Information (PII) on AWS.

Requirements state:

- All data must be encrypted at rest and in transit.

- All data must be replicated in at least two locations that are at least 500 miles (805 kilometers) apart.

Which solution meets these requirements?

A. Create primary and secondary Amazon S3 buckets in two separate Availability Zones that are at least 500 miles (805 kilometers) apart. Use a bucket policy to enforce access to the buckets only through HTTPS. Use a bucket policy to enforce Amazon S3 SSE-C on all objects uploaded to the bucket. Configure cross-region replication between the two buckets.

B. Create primary and secondary Amazon S3 buckets in two separate AWS Regions that are at least 500 miles (805 kilometers) apart. Use a bucket policy to enforce access to the buckets only through HTTPS. Use a bucket policy to enforce S3-Managed Keys (SSE-S3) on all objects uploaded to the bucket. Configure cross-region replication between the two buckets.

C. Create primary and secondary Amazon S3 buckets in two separate AWS Regions that are at least 500 miles (805 kilometers) apart. Use an IAM role to enforce access to the buckets only through HTTPS. Use a bucket policy to enforce Amazon S3-Managed Keys (SSE-S3) on all objects uploaded to the bucket. Configure cross-region replication between the two buckets.

D. Create primary and secondary Amazon S3 buckets in two separate Availability Zones that are at least 500 miles (805 kilometers) apart. Use a bucket policy to enforce access to the buckets only through HTTPS. Use a bucket policy to enforce AWS KMS encryption on all objects uploaded to the bucket. Configure cross-region replication between the two buckets. Create a KMS Customer Master Key (CMK) in the primary region for encrypting objects.
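
Several of the options above mention bucket policies that enforce HTTPS-only access and server-side encryption. As a rough sketch of what such a policy can look like, the Python function below builds one as a dictionary. It uses the `aws:SecureTransport` and `s3:x-amz-server-side-encryption` condition keys. The function name and the bucket name in the usage note are hypothetical, and `AES256` (SSE-S3) is only one of the encryption modes the options discuss.

```python
import json


def build_bucket_policy(bucket_name: str) -> dict:
    """Build a policy that denies plain-HTTP access and unencrypted uploads."""
    bucket_arn = f"arn:aws:s3:::{bucket_name}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Reject any request that does not arrive over TLS.
                "Sid": "DenyInsecureTransport",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                "Resource": [bucket_arn, f"{bucket_arn}/*"],
                "Condition": {"Bool": {"aws:SecureTransport": "false"}},
            },
            {
                # Reject uploads that do not request SSE-S3 (AES256).
                "Sid": "DenyUnencryptedUploads",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:PutObject",
                "Resource": f"{bucket_arn}/*",
                "Condition": {
                    "StringNotEquals": {
                        "s3:x-amz-server-side-encryption": "AES256"
                    }
                },
            },
        ],
    }
```

Calling `json.dumps(build_bucket_policy("example-pii-primary"), indent=2)` yields a JSON document of the kind that can be attached to a bucket. The same policy would be needed on both the primary and the secondary bucket.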

Question 38

A company is using an AWS CodeBuild project to build and package an application. The packages are copied to a shared Amazon S3 bucket before being deployed across multiple AWS accounts.

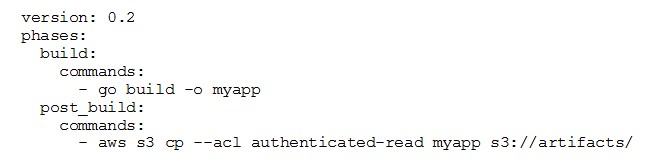

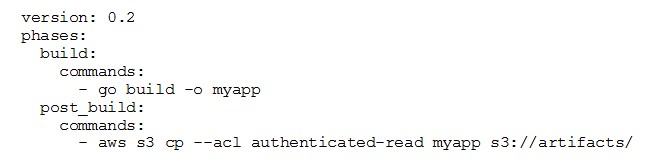

The buildspec.yml file contains the following:

The DevOps Engineer has noticed that anybody with an AWS account is able to download the artifacts.

What steps should the DevOps Engineer take to stop this?

A. Modify the post_build command to use --acl public-read and configure a bucket policy that grants read access to the relevant AWS accounts only.

B. Configure a default ACL for the S3 bucket that defines the set of authenticated users as the relevant AWS accounts only and grants read-only access.

C. Create an S3 bucket policy that grants read access to the relevant AWS accounts and denies read access to the principal "*".

D. Modify the post_build command to remove --acl authenticated-read and configure a bucket policy that allows read access to the relevant AWS accounts only.

Question 39

A DevOps engineer needs to grant several external contractors access to a legacy application that runs on an Amazon Linux Amazon EC2 instance. The application server is available only in a private subnet. The contractors are not authorized for VPN access.

What should the DevOps engineer do to grant the contractors access to the application server?

A. Create an IAM user and SSH keys for each contractor. Add the public SSH key to the application server's SSH authorized_keys file. Instruct the contractors to install the AWS CLI and AWS Systems Manager Session Manager plugin, update their AWS credentials files with their private keys, and use the aws ssm start-session command to gain access to the target application server instance ID.

B. Ask each contractor to securely send their SSH public key. Add this public key to the application server's SSH authorized_keys file. Instruct the contractors to use their private key to connect to the application server through SSH.

C. Ask each contractor to securely send their SSH public key. Use EC2 key pairs to import their key. Update the application server's SSH authorized_keys file. Instruct the contractors to use their private key to connect to the application server through SSH.

D. Create an IAM user for each contractor with programmatic access. Add each user to an IAM group that has a policy that allows the ssm:StartSession action. Instruct the contractors to install the AWS CLI and AWS Systems Manager Session Manager plugin, update their AWS credentials files with their access keys, and use the aws ssm start-session command to gain access to the target application server instance ID.

Question 40

A company hosts its staging website using an Amazon EC2 instance backed with Amazon EBS storage. The company wants to recover quickly with minimal data losses in the event of network connectivity issues or power failures on the EC2 instance.

Which solution will meet these requirements?

A. Add the instance to an EC2 Auto Scaling group with the minimum, maximum, and desired capacity set to 1.

B. Add the instance to an EC2 Auto Scaling group with a lifecycle hook to detach the EBS volume when the EC2 instance shuts down or terminates.

C. Create an Amazon CloudWatch alarm for the StatusCheckFailed_System metric and select the EC2 action to recover the instance.

D. Create an Amazon CloudWatch alarm for the StatusCheckFailed_Instance metric and select the EC2 action to reboot the instance.
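
For reference, the kind of alarm described in option C can be sketched as the parameter set below. The function only builds a dictionary and makes no AWS calls. The alarm name, period, and evaluation settings are illustrative assumptions, while the `AWS/EC2` namespace, the `StatusCheckFailed_System` metric, and the `arn:aws:automate:<region>:ec2:recover` action ARN are the standard identifiers for the EC2 recover action.

```python
def recover_alarm_params(instance_id: str, region: str) -> dict:
    """Build parameters for an alarm that recovers an EC2 instance."""
    return {
        # Hypothetical naming scheme for the alarm.
        "AlarmName": f"recover-{instance_id}",
        "Namespace": "AWS/EC2",
        "MetricName": "StatusCheckFailed_System",
        "Dimensions": [{"Name": "InstanceId", "Value": instance_id}],
        "Statistic": "Maximum",
        # Assumed thresholds: two consecutive failed one-minute checks.
        "Period": 60,
        "EvaluationPeriods": 2,
        "Threshold": 1.0,
        "ComparisonOperator": "GreaterThanOrEqualToThreshold",
        # Built-in EC2 action that recovers the instance.
        "AlarmActions": [f"arn:aws:automate:{region}:ec2:recover"],
    }
```

Assuming boto3 is available, the result could be passed to `put_metric_alarm` on a CloudWatch client as keyword arguments, for example `cloudwatch.put_metric_alarm(**recover_alarm_params("i-0123456789abcdef0", "us-east-1"))`, where the instance ID is a placeholder.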