Question 11

Your company has developed a website that allows users to upload and share video files. These files are most frequently accessed and shared when they are initially uploaded. Over time, the files are accessed and shared less frequently, although some old video files may remain very popular.

You need to design a storage system that is simple and cost-effective. What should you do?

A. Create a single-region bucket with Autoclass enabled.

B. Create a single-region bucket. Configure a Cloud Scheduler job that runs every 24 hours and changes the storage class based on upload date.

C. Create a single-region bucket with custom Object Lifecycle Management policies based on upload date.

D. Create a single-region bucket with Archive as the default storage class.

Question 12

You recently inherited a task for managing Dataflow streaming pipelines in your organization and noticed that proper access had not been provisioned to you. You need to request a Google-provided IAM role so you can restart the pipelines. You need to follow the principle of least privilege. What should you do?

A. Request the Dataflow Developer role.

B. Request the Dataflow Viewer role.

C. Request the Dataflow Worker role.

D. Request the Dataflow Admin role.

Question 13

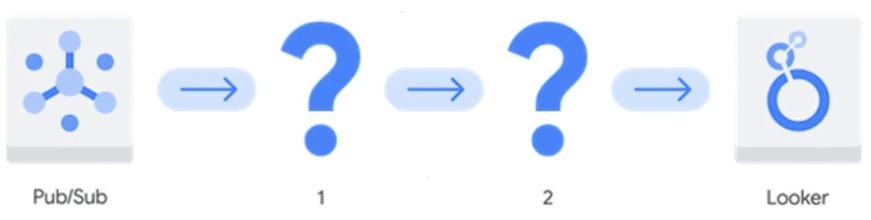

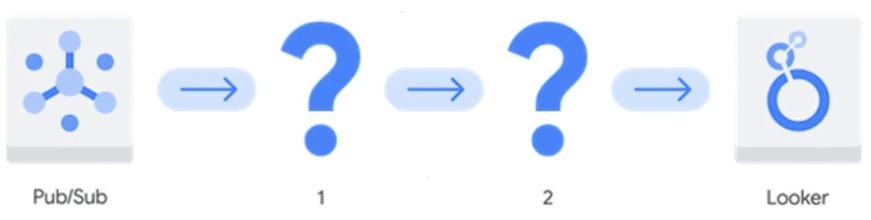

You need to create a new data pipeline. You want a serverless solution that meets the following requirements:

• Data is streamed from Pub/Sub and is processed in real-time.

• Data is transformed before being stored.

• Data is stored in a location that will allow it to be analyzed with SQL using Looker.

Which Google Cloud services should you recommend for the pipeline?

A. 1. Dataproc Serverless; 2. Bigtable

B. 1. Cloud Composer; 2. Cloud SQL for MySQL

C. 1. BigQuery; 2. Analytics Hub

D. 1. Dataflow; 2. BigQuery

Question 14

Your team wants to create a monthly report to analyze inventory data that is updated daily. You need to aggregate the inventory counts by using only the most recent month of data, and save the results to be used in a Looker Studio dashboard. What should you do?

A. Create a materialized view in BigQuery that uses the SUM() function and the DATE_SUB() function.

B. Create a saved query in the BigQuery console that uses the SUM() function and the DATE_SUB() function. Re-run the saved query every month, and save the results to a BigQuery table.

C. Create a BigQuery table that uses the SUM() function and the _PARTITIONDATE filter.

D. Create a BigQuery table that uses the SUM() function and the DATE_DIFF() function.

Question 15

You have a BigQuery dataset containing sales data. This data is actively queried for the first 6 months. After that, the data is not queried but needs to be retained for 3 years for compliance reasons. You need to implement a data management strategy that meets access and compliance requirements, while keeping cost and administrative overhead to a minimum. What should you do?

A. Use BigQuery long-term storage for the entire dataset. Set up a Cloud Run function to delete the data from BigQuery after 3 years.

B. Partition a BigQuery table by month. After 6 months, export the data to Coldline storage. Implement a lifecycle policy to delete the data from Cloud Storage after 3 years.

C. Set up a scheduled query to export the data to Cloud Storage after 6 months. Write a stored procedure to delete the data from BigQuery after 3 years.

D. Store all data in a single BigQuery table without partitioning or lifecycle policies.

Question 16

You have created a LookML model and dashboard that shows daily sales metrics for five regional managers to use. You want to ensure that the regional managers can only see sales metrics specific to their region. You need an easy-to-implement solution. What should you do?

A. Create a sales_region user attribute, and assign each manager’s region as the value of their user attribute. Add an access_filter Explore filter on the region_name dimension by using the sales_region user attribute.

B. Create five different Explores with the sql_always_filter Explore filter applied on the region_name dimension. Set each region_name value to the corresponding region for each manager.

C. Create separate Looker dashboards for each regional manager. Set the default dashboard filter to the corresponding region for each manager.

D. Create separate Looker instances for each regional manager. Copy the LookML model and dashboard to each instance. Provision viewer access to the corresponding manager.

Question 17

You need to design a data pipeline that ingests data from CSV, Avro, and Parquet files into Cloud Storage. The data includes raw user input. You need to remove all malicious SQL injections before storing the data in BigQuery. Which data manipulation methodology should you choose?

A. EL

B. ELT

C. ETL

D. ETLT

Question 18

You are working with a large dataset of customer reviews stored in Cloud Storage. The dataset contains several inconsistencies, such as missing values, incorrect data types, and duplicate entries. You need to clean the data to ensure that it is accurate and consistent before using it for analysis. What should you do?

A. Use the PythonOperator in Cloud Composer to clean the data and load it into BigQuery. Use SQL for analysis.

B. Use BigQuery to batch load the data into BigQuery. Use SQL for cleaning and analysis.

C. Use Storage Transfer Service to move the data to a different Cloud Storage bucket. Use event triggers to invoke Cloud Run functions to load the data into BigQuery. Use SQL for analysis.

D. Use Cloud Run functions to clean the data and load it into BigQuery. Use SQL for analysis.

Question 19

Your retail organization stores sensitive application usage data in Cloud Storage. You need to encrypt the data without the operational overhead of managing encryption keys. What should you do?

A. Use Google-managed encryption keys (GMEK).

B. Use customer-managed encryption keys (CMEK).

C. Use customer-supplied encryption keys (CSEK).

D. Use customer-supplied encryption keys (CSEK) for the sensitive data and customer-managed encryption keys (CMEK) for the less sensitive data.

Question 20

You work for a financial organization that stores transaction data in BigQuery. Your organization has a regulatory requirement to retain data for a minimum of seven years for auditing purposes. You need to ensure that the data is retained for seven years using an efficient and cost-optimized approach. What should you do?

A. Create a partition by transaction date, and set the partition expiration policy to seven years.

B. Set the table-level retention policy in BigQuery to seven years.

C. Set the dataset-level retention policy in BigQuery to seven years.

D. Export the BigQuery tables to Cloud Storage daily, and enforce a lifecycle management policy that has a seven-year retention rule.